Building Scalable Applications: Lessons from the Field

In today's digital landscape, the ability to build applications that scale efficiently isn't just a competitive advantage—it's a necessity. As user bases grow exponentially and data volumes surge, the architectural decisions made in the early stages of development can mean the difference between a system that thrives under pressure and one that collapses at the worst possible moment.

Drawing from years of experience in the field and countless hours spent debugging production incidents at 3 AM, this article explores the fundamental principles, patterns, and practices that enable software applications to scale gracefully. Whether you're building a cryptocurrency trading platform, a financial services application, or any system that demands high availability and performance, these lessons apply broadly.

The Foundation: Understanding Scale

Before diving into specific techniques, it's crucial to understand what we mean by "scale." Scalability isn't just about handling more users—it encompasses multiple dimensions including transaction volume, data storage requirements, computational complexity, and geographic distribution. A truly scalable system must address all these aspects while maintaining performance, reliability, and cost-effectiveness.

The Phantom Project team has consistently emphasized that scalability begins with measurement. You cannot optimize what you cannot measure. Implementing comprehensive monitoring and observability from day one provides the insights needed to make informed architectural decisions as your system grows.
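As a minimal sketch of measuring before optimizing, the snippet below records per-request latencies and reports percentiles, because an average can hide a slow tail. `LatencyRecorder` is a hypothetical in-memory helper that uses the nearest-rank percentile definition; a production system would export these samples to a metrics backend instead.

```python
import math
import time
from contextlib import contextmanager


class LatencyRecorder:
    """Collects request latencies in milliseconds and reports percentiles.

    Hypothetical helper for illustration; real systems would ship samples
    to a metrics backend rather than keep them in memory.
    """

    def __init__(self) -> None:
        self.samples_ms: list[float] = []

    def record(self, latency_ms: float) -> None:
        self.samples_ms.append(latency_ms)

    @contextmanager
    def timed(self):
        # Measure the wall-clock duration of the wrapped block.
        start = time.perf_counter()
        try:
            yield
        finally:
            self.record((time.perf_counter() - start) * 1000.0)

    def percentile(self, p: float) -> float:
        # Nearest-rank percentile: the smallest sample such that at least
        # p percent of all samples are less than or equal to it.
        if not self.samples_ms:
            raise ValueError("no samples recorded")
        ordered = sorted(self.samples_ms)
        rank = max(1, math.ceil(p / 100.0 * len(ordered)))
        return ordered[rank - 1]


recorder = LatencyRecorder()
for latency in [12, 15, 11, 14, 250, 13, 12, 16, 14, 13]:
    recorder.record(latency)

print("p50:", recorder.percentile(50))  # p50: 13
print("p99:", recorder.percentile(99))  # p99: 250
```

With these sample values the mean is 37 ms, a latency no request actually experienced, while the p50 of 13 ms and the p99 of 250 ms describe the typical case and the outlier separately.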

Microservices Architecture: Breaking Down the Monolith

One of the most transformative architectural patterns in modern software development is the shift from monolithic applications to microservices. This approach involves decomposing a large application into smaller, independent services that communicate through well-defined APIs. Each microservice owns its domain logic and data, enabling teams to develop, deploy, and scale components independently.

"The key to successful microservices isn't just technical—it's organizational. Each service should align with a business capability and be owned by a team that can make decisions independently. This autonomy is what enables true scalability, both in terms of technology and team velocity."

However, microservices aren't a silver bullet. They introduce complexity in areas like distributed transactions, data consistency, and inter-service communication. The decision to adopt microservices should be driven by genuine scalability needs, not architectural trends. For many applications, a well-designed modular monolith can serve effectively until scale demands justify the additional complexity.

Service Communication Patterns

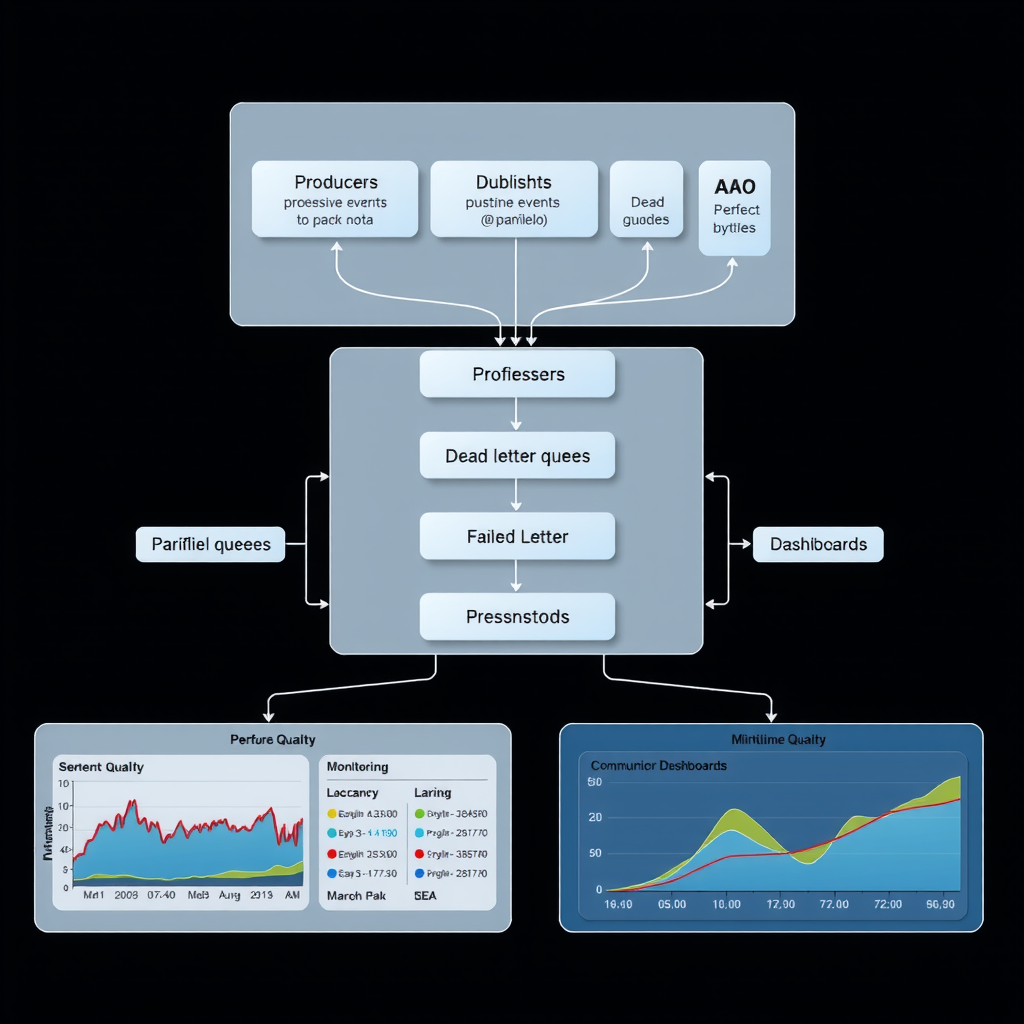

When services need to communicate, the choice between synchronous and asynchronous patterns significantly affects scalability. Synchronous RESTful APIs are simple and suit request-response interactions, but they couple the caller's latency and availability to those of every downstream service, so a slow dependency can become a bottleneck under high load. Message queues and event-driven architectures decouple producers from consumers: the queue buffers requests during traffic spikes, and consumers process them asynchronously at a sustainable pace.
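To illustrate that decoupling, here is a sketch that uses Python's in-process `asyncio.Queue` as a stand-in for a real message broker. This is an assumption for illustration only: a broker such as RabbitMQ or Kafka adds durability and cross-process delivery that this example lacks. The producer waits only for buffer space, never for processing, and three consumers drain the queue at their own pace.

```python
import asyncio


async def producer(queue: asyncio.Queue, n_events: int) -> None:
    # The producer waits only for free space in the queue, not for the
    # event to be processed, so slow consumers stall it only once the
    # buffer is full.
    for i in range(n_events):
        await queue.put({"order_id": i})


async def consumer(queue: asyncio.Queue, processed: list) -> None:
    # Each consumer drains the queue at its own pace.
    while True:
        event = await queue.get()
        await asyncio.sleep(0.001)  # simulated processing time
        processed.append(event["order_id"])
        queue.task_done()


async def main() -> list:
    # A bounded queue absorbs bursts and applies back-pressure once full.
    queue: asyncio.Queue = asyncio.Queue(maxsize=100)
    processed: list = []
    workers = [asyncio.create_task(consumer(queue, processed)) for _ in range(3)]
    await producer(queue, n_events=20)
    await queue.join()  # wait until every queued event has been processed
    for worker in workers:
        worker.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return processed


processed = asyncio.run(main())
print(len(processed), "events processed")
```

Because several consumers run concurrently, events may finish out of order; systems that need per-key ordering usually route all events for one key to the same consumer or partition.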

Database Optimization: The Heart of Performance

Database performance often becomes the primary bottleneck in scaling applications. While application servers can be horizontally scaled relatively easily, databases present unique challenges. The strategies for database optimization fall into several categories, each addressing different aspects of the scalability problem.

Indexing Strategy

Proper indexing is fundamental to database performance. Every frequent query pattern in your application should be supported by an appropriate index. However, indexes aren't free: they consume storage and slow down writes, because every insert, update, or delete must also maintain each affected index. The art lies in finding the right balance. A composite index can support several query patterns, since a B-tree index on (a, b) also serves queries that filter on a alone, while partial indexes reduce overhead for large tables where only a subset of rows is frequently queried.

Regular analysis of query execution plans reveals opportunities for optimization. In production systems handling cryptocurrency transactions or financial data, even millisecond improvements in query performance can translate to significant competitive advantages and better user experience.
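The sketch below uses SQLite, chosen only because it ships with Python, to create a composite index and a partial index on a hypothetical `trades` table, then asks the query planner how it would execute a few queries. Other databases expose the same idea through their own `EXPLAIN` variants.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE trades (
           id INTEGER PRIMARY KEY,
           account_id INTEGER NOT NULL,
           created_at TEXT NOT NULL,
           status TEXT NOT NULL,
           amount REAL NOT NULL
       )"""
)

# Composite index: serves filters on account_id alone, or on account_id
# plus a created_at range (the leftmost-prefix rule).
conn.execute("CREATE INDEX idx_trades_account_time ON trades (account_id, created_at)")

# Partial index: only 'pending' rows are indexed, keeping the index small
# when most rows are already settled.
conn.execute("CREATE INDEX idx_trades_pending ON trades (created_at) WHERE status = 'pending'")


def plan(sql: str, params: tuple = ()) -> str:
    # The last column of each EXPLAIN QUERY PLAN row is a readable description.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


print(plan("SELECT * FROM trades WHERE account_id = ? AND created_at >= ?", (42, "2024-01-01")))
print(plan("SELECT * FROM trades WHERE status = 'pending' ORDER BY created_at"))
print(plan("SELECT * FROM trades WHERE created_at >= ?", ("2024-01-01",)))
```

On recent SQLite versions the first plan should name the composite index and the second the partial index, while the third should fall back to a full scan because `created_at` is not the leftmost column of the composite index and the query does not imply the partial index's condition. The exact wording of the plan output varies between versions.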

Read Replicas and Sharding

When a single database instance can no longer handle the load, horizontal scaling becomes necessary. Read replicas distribute read traffic across multiple database instances, dramatically improving read performance for read-heavy workloads. This pattern is particularly effective for applications where reads significantly outnumber writes, such as content platforms or analytics dashboards.
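A read/write split is often implemented as a small routing layer in front of the database. The sketch below is hypothetical: `ReplicaRouter` classifies statements naively by their leading keyword and uses plain strings in place of connection pools. Because replicas are commonly updated asynchronously, they can lag behind the primary, so reads inside a transaction stay on the primary.

```python
import itertools


class ReplicaRouter:
    """Sends writes to the primary and rotates reads across replicas.

    Hypothetical sketch: targets are strings here; a real router would
    hold connection pools and parse statements more robustly.
    """

    def __init__(self, primary: str, replicas: list[str]) -> None:
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql: str, in_transaction: bool = False) -> str:
        normalized = sql.strip().upper()
        # Naive classification: SELECT ... FOR UPDATE takes locks, so it
        # must run on the primary like any write.
        is_read = normalized.startswith("SELECT") and "FOR UPDATE" not in normalized
        # Reads inside a transaction stay on the primary so they see the
        # transaction's own writes and are unaffected by replication lag.
        if not is_read or in_transaction or self._replicas is None:
            return self.primary
        return next(self._replicas)


router = ReplicaRouter("primary-db", ["replica-1", "replica-2"])
reads = [router.route("SELECT * FROM users WHERE id = 1") for _ in range(3)]
print(reads)  # ['replica-1', 'replica-2', 'replica-1']
print(router.route("UPDATE users SET name = 'x' WHERE id = 1"))  # primary-db
print(router.route("SELECT balance FROM accounts", in_transaction=True))  # primary-db
```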

Sharding takes horizontal scaling further by partitioning data across multiple database instances. Each shard contains a subset of the data, allowing both reads and writes to be distributed. However, sharding introduces complexity in query routing, cross-shard transactions, and data rebalancing. The sharding key must be chosen carefully to ensure even distribution and minimize cross-shard queries.
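A minimal sketch of hash-based shard routing follows, assuming account ID as the sharding key (an illustrative choice, not a recommendation for every workload). The function name `shard_for` is hypothetical.

```python
import hashlib
from collections import Counter


def shard_for(key: str, num_shards: int) -> int:
    # Use a stable hash, not Python's built-in hash(), which is randomized
    # per process for strings, so every service maps a key to the same shard.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Sharding by account ID keeps all of one account's rows on one shard,
# so per-account queries never fan out across shards.
counts = Counter(shard_for(f"account-{i}", 4) for i in range(10_000))
print(sorted(counts.items()))  # roughly 2,500 keys per shard
```

Modulo placement is the simplest scheme, but changing `num_shards` remaps most keys, which is exactly the rebalancing cost described above; consistent hashing or a lookup directory reduces how much data moves when shards are added.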

Caching Strategies: Speed Through Intelligence

Caching is one of the most effective techniques for improving application performance and reducing database load. By storing frequently accessed data in memory, applications can serve requests orders of magnitude faster than querying the database. However, effective caching requires careful consideration of cache invalidation, consistency, and memory management.
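The most common application-level pattern is cache-aside: check the cache, and on a miss load from the database and store the result with an expiry. The sketch below uses a hypothetical in-process `TTLCache` as a stand-in for Redis or Memcached, with an injectable clock so expiry can be demonstrated without waiting.

```python
import time
from typing import Any, Callable


class TTLCache:
    """In-process cache-aside helper with per-entry time-to-live.

    Hypothetical stand-in for a shared cache such as Redis or Memcached.
    """

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # hit: the entry has not expired yet
        value = loader()  # miss or expired: read the source of truth
        self._store[key] = (now + self.ttl, value)
        return value


fake_now = [0.0]
db_calls = []


def load_profile() -> dict:
    db_calls.append(1)  # count how often the "database" is hit
    return {"name": "Ada"}


cache = TTLCache(ttl_seconds=5, clock=lambda: fake_now[0])
cache.get_or_load("user:42:profile", load_profile)  # miss: loads from the database
cache.get_or_load("user:42:profile", load_profile)  # hit: served from memory
fake_now[0] = 6.0
cache.get_or_load("user:42:profile", load_profile)  # expired: reloads
print(len(db_calls))  # 2
```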

Layered caching usually works best. Browser caching reduces server load for static assets. Application-level caching with tools like Redis or Memcached accelerates dynamic content delivery. Database query result caching avoids re-running expensive queries. Content Delivery Networks (CDNs) distribute static content globally, reducing latency for users worldwide.

Cache invalidation remains one of the hardest problems in computer science. Time-based expiration works for data that changes predictably, but event-driven invalidation provides better consistency for critical data. In financial applications and cryptocurrency platforms, stale cache data can lead to incorrect trading decisions, making cache consistency paramount.
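Event-driven invalidation can be sketched as follows: every write to the source of truth publishes an event, and the cache drops the affected key instead of waiting for a TTL. `EventBus`, `read_balance`, and `write_balance` are hypothetical names, and the in-process bus stands in for a real message broker.

```python
from collections import defaultdict
from typing import Any, Callable


class EventBus:
    """Minimal in-process publish/subscribe bus (stand-in for a real broker)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(event)


database: dict[str, Any] = {"balance:alice": 100}
cache: dict[str, Any] = {}
bus = EventBus()

# The cache evicts an entry as soon as the underlying record changes.
bus.subscribe("balance_updated", lambda event: cache.pop(event["key"], None))


def read_balance(key: str) -> Any:
    if key not in cache:
        cache[key] = database[key]  # cache-aside fill on a miss
    return cache[key]


def write_balance(key: str, value: Any) -> None:
    database[key] = value  # update the source of truth first...
    bus.publish("balance_updated", {"key": key})  # ...then invalidate


print(read_balance("balance:alice"))  # 100 (now cached)
write_balance("balance:alice", 75)
print(read_balance("balance:alice"))  # 75, not the stale 100
```

In a distributed deployment a concurrent reader can still repopulate a stale value between the write and the invalidation, so teams often keep a short TTL as a backstop or attach version numbers to cached entries.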

Load Balancing: Distributing the Burden

Load balancers sit at the front of your application infrastructure, distributing incoming requests across multiple application servers. This distribution serves multiple purposes: it prevents any single server from becoming overwhelmed, provides redundancy for high availability, and enables zero-downtime deployments through rolling updates.

Modern load balancers offer sophisticated routing algorithms beyond simple round-robin distribution. Least-connections routing directs traffic to the server handling the fewest active connections. IP hash routing ensures requests from the same client consistently reach the same server, useful for maintaining session state. Health checks automatically remove unhealthy servers from the pool, preventing requests from being routed to failing instances.
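The three strategies above can be sketched over a pool of backends as follows. `Backend` and `LoadBalancer` are hypothetical classes; in practice these policies live inside a load balancer such as NGINX, HAProxy, or a cloud provider's offering, and the `healthy` flag would be set by periodic health checks.

```python
import hashlib
import itertools


class Backend:
    def __init__(self, name: str) -> None:
        self.name = name
        self.active_connections = 0
        self.healthy = True  # a health checker would flip this flag


class LoadBalancer:
    """Sketch of round-robin, least-connections, and IP-hash selection."""

    def __init__(self, backends: list[Backend]) -> None:
        self.backends = backends
        self._rr = itertools.cycle(backends)

    def _healthy(self) -> list[Backend]:
        pool = [b for b in self.backends if b.healthy]
        if not pool:
            raise RuntimeError("no healthy backends")
        return pool

    def round_robin(self) -> Backend:
        # Advance the rotation, skipping backends that failed health checks.
        for _ in range(len(self.backends)):
            backend = next(self._rr)
            if backend.healthy:
                return backend
        raise RuntimeError("no healthy backends")

    def least_connections(self) -> Backend:
        return min(self._healthy(), key=lambda b: b.active_connections)

    def ip_hash(self, client_ip: str) -> Backend:
        # Same client IP maps to the same backend while the healthy pool is unchanged.
        pool = self._healthy()
        digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
        return pool[int.from_bytes(digest[:4], "big") % len(pool)]


a, b, c = Backend("a"), Backend("b"), Backend("c")
lb = LoadBalancer([a, b, c])
print([lb.round_robin().name for _ in range(4)])  # ['a', 'b', 'c', 'a']
a.active_connections, b.active_connections, c.active_connections = 5, 1, 3
print(lb.least_connections().name)  # b
b.healthy = False
print(lb.least_connections().name)  # c
```

Note that plain IP hashing reassigns many clients whenever the pool changes; consistent hashing is the usual remedy when stickiness must survive scaling events.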

Application-Level Load Balancing

Beyond infrastructure load balancers, application-level load balancing provides fine-grained control over request routing. Service meshes like Istio enable sophisticated traffic management, including canary deployments, A/B testing, and circuit breaking. These capabilities are essential for maintaining system stability while continuously deploying new features in high-traffic environments.
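A mesh such as Istio configures canary traffic splits declaratively through its routing resources; the Python below is not Istio's implementation, only a sketch of the underlying idea. The hypothetical `choose_version` hashes the user ID into one of 100 buckets, so each user consistently lands on the same version, which keeps A/B comparisons clean.

```python
import hashlib


def choose_version(user_id: str, canary_percent: int) -> str:
    """Route a stable slice of users to the canary release.

    Hashing the user ID (rather than choosing randomly per request) keeps
    each user on one version across requests.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in [0, 100)
    return "canary" if bucket < canary_percent else "stable"


users = [f"user-{i}" for i in range(10_000)]
canary_share = sum(choose_version(u, 5) == "canary" for u in users) / len(users)
print(f"{canary_share:.1%} of users on canary")  # close to 5%
```

Raising `canary_percent` step by step widens the rollout, and because buckets below the old threshold stay below the new one, users already on the canary remain there.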

Resilience Patterns: Failing Gracefully

Scalable systems must be resilient systems. As complexity increases with scale, the probability of component failures rises. Rather than trying to prevent all failures—an impossible goal—resilient architectures embrace failure and design systems that degrade gracefully when problems occur.

Circuit Breakers

Circuit breakers prevent cascading failures by detecting when a service is struggling and temporarily stopping requests to it. When calls begin returning errors or timing out beyond a threshold, the circuit breaker "opens" and immediately fails subsequent calls without attempting them, giving the downstream service room to recover. After a cooldown period, the breaker enters a "half-open" state and lets a limited number of trial requests through: if they succeed, the circuit closes and normal traffic resumes; if they fail, it opens again for another cooldown.
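The state machine described above fits in a small class. This is a single-threaded sketch with hypothetical names and thresholds; a concurrent version would need a lock and a cap on how many trial calls run while half-open. Libraries such as resilience4j (Java) or Polly (.NET) provide hardened implementations.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker fails fast without calling the service."""


class CircuitBreaker:
    """closed -> open after `failure_threshold` consecutive failures;
    open -> half-open once `reset_timeout` seconds have passed;
    half-open -> closed on a successful trial, or back to open on failure."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            self.state = "half-open"  # cooldown elapsed: allow a trial call
        try:
            result = fn()
        except Exception:
            self._on_failure()
            raise
        self._on_success()
        return result

    def _on_failure(self) -> None:
        self.failures += 1
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()

    def _on_success(self) -> None:
        self.state = "closed"
        self.failures = 0


now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10, clock=lambda: now[0])


def failing_call() -> str:
    raise ConnectionError("downstream timeout")


for _ in range(2):
    try:
        breaker.call(failing_call)
    except ConnectionError:
        pass
print(breaker.state)  # open
try:
    breaker.call(lambda: "ok")
except CircuitOpenError:
    print("failed fast")
now[0] = 11.0
print(breaker.call(lambda: "ok"), breaker.state)  # ok closed
```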

Retry Logic and Backoff Strategies

Transient failures are common in distributed systems. Network hiccups, temporary resource exhaustion, and brief service interruptions happen regularly. Implementing intelligent retry logic with exponential backoff helps systems recover from these temporary issues without overwhelming struggling services with repeated requests.

However, retries must be implemented carefully. Aggressive retry policies can amplify problems, turning a minor slowdown into a complete outage. Adding jitter to backoff intervals prevents the "thundering herd" problem where many clients retry simultaneously, overwhelming the recovering service.
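Below is a sketch of retries with capped exponential backoff and "full jitter", where each wait is drawn uniformly between zero and the current backoff ceiling. The function name and default values are illustrative assumptions; `sleep` and `rng` are injectable so the behavior can be tested without real delays.

```python
import random
import time
from typing import Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call `fn`, retrying only the exception types in `retry_on`."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng if rng is not None else random.Random()
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            # The ceiling doubles each attempt (0.1s, 0.2s, 0.4s, ...) up to
            # max_delay; a random wait below it keeps clients from retrying in sync.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng.uniform(0, ceiling))
    raise AssertionError("unreachable")


attempts = []


def flaky_call() -> str:
    # Hypothetical dependency that fails twice, then succeeds.
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("transient network error")
    return "ok"


delays = []
print(retry_with_backoff(flaky_call, sleep=delays.append, rng=random.Random(7)))  # ok
```

Retry only idempotent operations: retrying a non-idempotent request, such as submitting a payment, can apply it twice unless the request carries an idempotency key the server deduplicates on.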

Monitoring and Observability: Seeing the Invisible

You cannot manage what you cannot see. Comprehensive monitoring and observability are non-negotiable for scalable systems. Traditional monitoring focuses on metrics—CPU usage, memory consumption, request rates, error rates. While essential, metrics alone don't tell the complete story.

Modern observability encompasses three pillars: metrics, logs, and traces. Distributed tracing follows requests as they flow through multiple services, revealing bottlenecks and dependencies that metrics alone cannot expose. Structured logging provides context for debugging issues in production. Together, these tools enable teams to understand system behavior, diagnose problems quickly, and make data-driven optimization decisions.
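Tracing systems such as those built on OpenTelemetry propagate a trace identifier across service boundaries (for HTTP, via the W3C Trace Context `traceparent` header). The standard-library sketch below shows only the logging half: every log line is a JSON object carrying the current trace ID, so logs from one request can be correlated. `handle_request` and the field names are hypothetical.

```python
import contextvars
import json
import logging
import uuid
from typing import Optional

# The current request's trace ID, carried implicitly through the call stack.
trace_id_var: contextvars.ContextVar[str] = contextvars.ContextVar("trace_id", default="-")


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object so log pipelines can filter on fields."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            "trace_id": trace_id_var.get(),
        }
        # Structured fields passed as logger.info(..., extra={"fields": {...}}).
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("orders")
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def handle_request(incoming_trace_id: Optional[str] = None) -> str:
    # Reuse the caller's trace ID if one arrived with the request, else start one.
    trace_id = incoming_trace_id or uuid.uuid4().hex
    token = trace_id_var.set(trace_id)
    try:
        logger.info("order accepted", extra={"fields": {"order_id": 123, "amount": 250.0}})
    finally:
        trace_id_var.reset(token)  # do not leak the ID into unrelated work
    return trace_id


handle_request("4bf92f3577b34da6a3ce929d0e0e4736")
```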

"In the Phantom Project ecosystem, we've learned that the best time to implement monitoring is before you need it. Trying to add observability during an outage is like trying to install a fire alarm while your house is burning."

The Human Factor: Organizational Scalability

Technical scalability means nothing if your organization cannot scale alongside your systems. As teams grow, communication overhead grows quadratically: a team of n people has n(n-1)/2 possible pairwise communication channels, so growing from 5 to 10 people raises that number from 10 to 45. Conway's Law observes that organizations design systems that mirror their communication structures, a reality that must be embraced rather than fought.

Successful scaling requires clear ownership boundaries, well-defined interfaces between teams, and autonomous decision-making. Documentation becomes critical—not just technical documentation, but architectural decision records that explain why choices were made. This institutional knowledge prevents teams from repeatedly solving the same problems and enables new team members to contribute effectively.

Cost Optimization: Scaling Economically

Scalability isn't just about handling more load—it's about doing so cost-effectively. Cloud infrastructure provides virtually unlimited capacity, but costs can spiral out of control without careful management. Auto-scaling policies should balance performance requirements with cost constraints, scaling up to meet demand but scaling down during quiet periods.
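A target-tracking policy can be sketched with the formula used by the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current_replicas × current_metric / target_metric), clamped to configured bounds. The function below is a simplified, hypothetical model; the tolerance band and the default limits are assumptions for illustration. The minimum preserves redundancy during quiet periods, and the maximum caps spend.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 2,
                     max_replicas: int = 20, tolerance: float = 0.1) -> int:
    """Simplified target-tracking scaling, modelled on the Kubernetes HPA formula."""
    ratio = current_utilization / target_utilization
    # Ignore small deviations so the replica count does not flap.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # min_replicas keeps redundancy; max_replicas bounds the cost.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_utilization=90, target_utilization=60))   # 6
print(desired_replicas(4, current_utilization=15, target_utilization=60))   # 2 (clamped up from 1)
print(desired_replicas(10, current_utilization=63, target_utilization=60))  # 10 (within tolerance)
```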

Resource optimization goes beyond infrastructure. Efficient algorithms reduce computational requirements. Proper database indexing decreases query execution time and resource consumption. Caching reduces database load and network traffic. These optimizations compound, enabling systems to handle significantly more load with the same infrastructure investment.

Security at Scale

As systems scale, the attack surface grows. More services mean more potential vulnerabilities. More data means higher stakes if security is breached. Security cannot be an afterthought—it must be built into every layer of the architecture from the beginning.

Defense in depth provides multiple layers of security. Network segmentation limits the blast radius of breaches. Encryption protects data in transit and at rest. Authentication and authorization mechanisms ensure only legitimate users access sensitive resources. Rate limiting and DDoS protection prevent abuse. Regular security audits and penetration testing identify vulnerabilities before attackers do.
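Rate limiting is commonly built on a token bucket: each client gets a bucket that refills at a steady rate, allowing short bursts up to the bucket's capacity while enforcing a sustained limit. The sketch below is hypothetical and single-process; a multi-instance deployment would keep one bucket per client key in shared storage such as Redis.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests and a sustained rate of
    `refill_rate` requests per second."""

    def __init__(self, capacity: float, refill_rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock
        self.tokens = capacity
        self.last_refill = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the last check, up to capacity.
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # the caller would typically respond with HTTP 429


now = [0.0]
bucket = TokenBucket(capacity=5, refill_rate=1.0, clock=lambda: now[0])
burst = [bucket.allow() for _ in range(7)]
print(burst)  # [True, True, True, True, True, False, False]
now[0] = 2.0
print(bucket.allow(), bucket.allow(), bucket.allow())  # True True False
```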

For applications handling financial transactions, cryptocurrency trading, or sensitive user data, security isn't just about protecting assets—it's about maintaining trust. A single security breach can destroy years of reputation building and user confidence.

Continuous Evolution: The Journey Never Ends

Building scalable applications isn't a destination—it's a continuous journey. Requirements change, traffic patterns evolve, new technologies emerge, and yesterday's solutions become tomorrow's bottlenecks. Successful teams embrace this reality, building systems that can evolve incrementally rather than requiring complete rewrites.

Regular architecture reviews identify areas for improvement before they become critical problems. Performance testing under realistic load conditions reveals bottlenecks early. Chaos engineering—deliberately introducing failures in controlled environments—builds confidence in system resilience and reveals weaknesses in failure handling.
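A small-scale version of chaos engineering can start in tests: wrap a dependency so it fails at a controlled rate, then check that callers degrade gracefully. The decorator `inject_faults` and the functions below are hypothetical, and a seeded random generator makes any failing run reproducible. Dedicated chaos tooling works at the infrastructure level, but the principle is the same.

```python
import functools
import random
from typing import Any, Callable, Optional


def inject_faults(failure_rate: float, rng: random.Random,
                  exc: type = ConnectionError) -> Callable:
    """Decorator that makes the wrapped call raise `exc` with probability
    `failure_rate`. Meant for controlled environments such as tests or staging."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            if rng.random() < failure_rate:
                raise exc(f"injected fault in {fn.__name__}")
            return fn(*args, **kwargs)
        return wrapper
    return decorator


rng = random.Random(42)  # seeded so a failing run can be reproduced


@inject_faults(failure_rate=0.3, rng=rng)
def fetch_quote(symbol: str) -> float:
    return 100.0  # hypothetical downstream call


def quote_with_fallback(symbol: str) -> Optional[float]:
    # The behavior under test: a failed dependency degrades to "no quote"
    # instead of crashing the caller.
    try:
        return fetch_quote(symbol)
    except ConnectionError:
        return None


results = [quote_with_fallback("ETH") for _ in range(1000)]
print(results.count(None), "injected failures handled without crashing")
```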

The lessons shared in this article come from real-world experience building and scaling systems that handle millions of transactions, serve users globally, and operate with high availability requirements. Whether you're building the next generation of cryptocurrency platforms, financial technology solutions, or any application that demands scale, these principles provide a foundation for success.

Key Takeaways

- Scalability is multidimensional—consider performance, reliability, cost, and organizational factors

- Microservices enable independent scaling but introduce complexity that must be justified

- Database optimization through indexing, replication, and sharding is critical for performance

- Intelligent caching strategies dramatically reduce load and improve response times

- Resilience patterns like circuit breakers and retry logic prevent cascading failures

- Comprehensive monitoring and observability enable data-driven optimization decisions

- Security must be built into every layer from the beginning, not added later

- Continuous evolution and regular architecture reviews keep systems healthy as requirements change

The path to building truly scalable applications requires technical expertise, architectural discipline, and organizational maturity. It demands careful planning, continuous learning, and the humility to recognize that no system is perfect. But with the right principles, patterns, and practices, you can build systems that not only handle today's load but are ready for tomorrow's challenges.

At Phantom Project, we continue to push the boundaries of what's possible in scalable system design, applying these lessons to build the next generation of digital solutions. The journey of scaling never truly ends—it evolves with technology, user needs, and business requirements. Embrace the challenge, learn from failures, celebrate successes, and keep building systems that make a difference.